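Introduction to volumes

A pod has a lifecycle. When that lifecycle ends, the data inside the pod (configuration files, business data, and so on) disappears with it.

The data therefore has to be separated from the pod and placed on a dedicated volume.

Pods can also be rescheduled between the nodes of a Kubernetes cluster. If a pod fails and is rescheduled onto another node, the link between the pod and any data kept on the original node is broken.

Data persistence therefore requires a storage system that is independent of the cluster nodes.

In short: a volume gives containers the ability to mount external storage.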
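
To make the idea concrete, here is a minimal sketch of how a pod wires a volume into a container. A volume is declared once under `spec.volumes` and mounted into a container by name. The pod name `volume-demo` and volume name `shared` are hypothetical and for illustration only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-demo          # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: shared           # hypothetical; must match a name under spec.volumes
      mountPath: /data       # where the volume appears inside the container
  volumes:
  - name: shared
    emptyDir: {}             # the volume type; hostPath, nfs, persistentVolumeClaim, etc. go here instead
```

Only the entry under `volumes` changes from one storage type to another. The `volumeMounts` side stays the same, which is why the examples below differ mainly in that section.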

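Volume types

Run kubectl explain pod.spec.volumes, or see: https://kubernetes.io/docs/concepts/storage/

Local volumes

emptyDir: deleted together with the pod, so its data is cleared as well; used for temporary data

hostPath: maps a directory on the host (node) into the pod

Network volumes

NAS: nfs, etc.

SAN: iscsi, FC, etc.

Distributed storage: glusterfs, cephfs, rbd, cinder, etc.

Cloud storage: aws, azurefile, etc.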

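Choosing a volume type

From the application's point of view, storage falls into three main categories:

File storage, e.g. nfs, glusterfs, cephfs

Pros: data sharing (multiple pods can mount it and read and write at the same time)

Cons: relatively poor performance

Block storage, e.g. iscsi, rbd

Pros: better performance than file storage

Cons: data generally cannot be shared (with some exceptions)

Object storage, e.g. Ceph object storage

Pros: good performance, data sharing

Cons: accessed through a special interface, with limited support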

Local volume: emptyDir

Use case

Sharing data between the containers of a single pod

Characteristics

The volume is deleted when the pod is deleted

Create the YAML file

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-emptydir
spec:
  containers:
  - name: write
    image: centos:centos7
    imagePullPolicy: IfNotPresent
    # write a file into the shared volume, then stay alive
    command: ["bash", "-c", "echo haha > /data/1.txt ; sleep 6000"]
    volumeMounts:
    - name: data
      mountPath: /data
  - name: read
    image: centos:centos7
    imagePullPolicy: IfNotPresent
    # may run before "write" has created the file; containers in a pod are not synchronized
    command: ["bash", "-c", "cat /data/1.txt; sleep 6000"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    emptyDir: {}
```

Create the pod from the YAML file

```
[root@k8s-master1 ~]
pod/volume-emptydir created
```

Check that the pod has started

```
[root@k8s-master1 ~]
NAME              READY   STATUS    RESTARTS   AGE
volume-emptydir   2/2     Running   0          15s
```

Check the pod description

```
[root@k8s-master1 ~]
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  50s   default-scheduler  Successfully assigned default/volume-emptydir to k8s-worker1
  Normal  Pulling    50s   kubelet            Pulling image "centos:centos7"
  Normal  Pulled     28s   kubelet            Successfully pulled image "centos:centos7" in 21.544912361s
  Normal  Created    28s   kubelet            Created container write
  Normal  Started    28s   kubelet            Started container write
  Normal  Pulled     28s   kubelet            Container image "centos:centos7" already present on machine
  Normal  Created    28s   kubelet            Created container read
  Normal  Started    28s   kubelet            Started container read
```

Verify

```
[root@k8s-master1 ~]
[root@k8s-master1 ~]
haha
```
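
By default an emptyDir lives on the node's disk. The sketch below is a variation that is not part of the original walkthrough. It places the volume in RAM (tmpfs) and caps its size, and it would replace the `volumes` section of `volume-emptydir` above. `medium` and `sizeLimit` are standard emptyDir fields, but the `64Mi` value is an arbitrary example.

```yaml
volumes:
- name: data
  emptyDir:
    medium: Memory   # tmpfs (RAM) instead of the node's disk
    sizeLimit: 64Mi  # arbitrary example upper bound on the volume's size
```

Keep in mind that files written to a memory-backed emptyDir count against the container's memory limit.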

Local volume: hostPath

Create the YAML file

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-hostpath
spec:
  containers:
  - name: busybox
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c", "echo haha > /data/1.txt ; sleep 600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    hostPath:
      path: /opt         # directory on the node that runs the pod
      type: Directory    # the directory must already exist on that node
```

Create the pod from the YAML file

```
[root@k8s-master1 ~]
pod/volume-hostpath created
```

Check the pod status

```
[root@k8s-master1 ~]
volume-hostpath   1/1   Running   0   29s   10.224.194.120   k8s-worker1   <none>   <none>
```

Verify the file on the node where the pod is running

```
[root@k8s-worker1 ~]
haha
```
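
hostPath data lives on a single node (here k8s-worker1). If the pod were rescheduled elsewhere, it would not see `/opt/1.txt`. One common mitigation is sketched below and should be merged into the pod spec above. It pins the pod to that node through the built-in `kubernetes.io/hostname` label and lets the kubelet create the directory if it is missing. The path `/opt/volume-hostpath` is a hypothetical example.

```yaml
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-worker1   # schedule only onto this node
  volumes:
  - name: data
    hostPath:
      path: /opt/volume-hostpath          # hypothetical directory
      type: DirectoryOrCreate             # created (mode 0755) if nothing exists at the path
```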

Network volume: nfs

Set up the NFS server

```
[root@nfsserver ~]
[root@nfsserver ~]
/data/nfs *(rw,no_root_squash,sync)
[root@nfsserver ~]
[root@nfsserver ~]
```

Install the NFS client packages on all worker nodes

```
[root@k8s-worker1 ~]
[root@k8s-worker2 ~]
```

Verify that NFS is reachable

```
[root@node1 ~]
Export list for 192.168.10.129:
/data/nfs *
[root@node2 ~]
Export list for 192.168.10.129:
/data/nfs *
```

Create the YAML file on the master node

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: volume-nfs
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15-alpine
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: documentroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: documentroot
        nfs:
          server: 192.168.10.129   # NFS server address
          path: /data/nfs          # exported directory
```

Apply the YAML

```
[root@k8s-master1 ~]
deployment.apps/volume-nfs created
```

Create a test file in the NFS server's shared directory, then verify from the pods

```
[root@k8s-master1 ~]
volume-nfs-649d848b57-qg4bz   1/1   Running   0   10s
volume-nfs-649d848b57-wrnpn   1/1   Running   0   10s
[root@k8s-master1 ~]
/ #
index.html
/ #
volume-nfs
/ #
[root@k8s-master1 ~]
/ #
index.html
/ #
volume-nfs
/ #
```

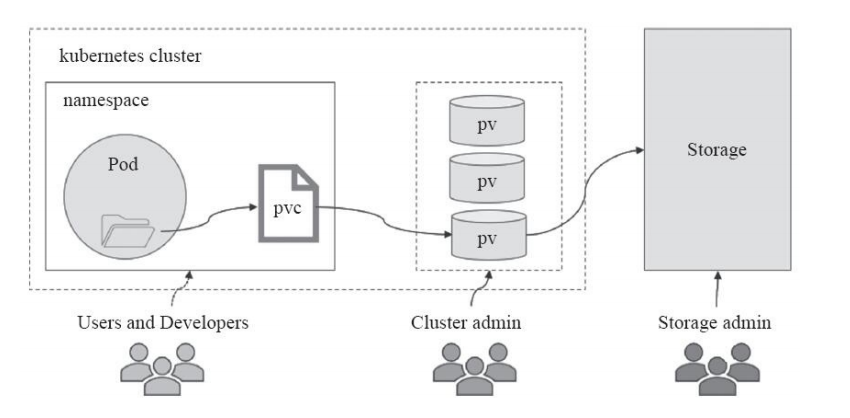
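
If the nginx pods should only serve the shared directory and never modify it, the nfs volume source also accepts a `readOnly` flag. The sketch below shows the `volumes` section of `volume-nfs` with that flag set. It is an optional variation, not part of the original setup.

```yaml
      volumes:
      - name: documentroot
        nfs:
          server: 192.168.10.129
          path: /data/nfs
          readOnly: true     # mount the export read-only inside the pods
```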

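PV (PersistentVolume) and PVC (PersistentVolumeClaim)

PersistentVolume (PV)

A PV is a piece of storage that has already been configured (it can be backed by any volume type).

In other words, shared network storage is configured and defined as a PV.

PersistentVolumeClaim (PVC)

A PVC is a request for storage: it states how much capacity and which access mode the user needs.

Relationship between PV and PVC

A PV provides storage resources (the producer)

A PVC consumes storage resources (the consumer)

A PVC is bound to a PV in order to use it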

Implementing an NFS-backed PV and PVC

Write the YAML file for the PV

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /data/nfs
    server: 192.168.10.129
```

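The three classic access modes are listed below; see: https://kubernetes.io/docs/concepts/storage/persistent-volumes/#access-modes (newer Kubernetes versions also add ReadWriteOncePod, which limits the volume to a single pod)

ReadWriteOnce: mounted read-write by a single node

ReadOnlyMany: mounted read-only by many nodes

ReadWriteMany: mounted read-write by many nodes

Create the PV and verify

```
[root@k8s-master1 ~]
persistentvolume/pv-nfs created
[root@k8s-master1 ~]
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv-nfs   1Gi        RWX            Retain           Available                                   81s
```

RWX is short for ReadWriteMany

Retain is the reclaim policy, and it is the default for manually created PVs. When the PVC is deleted, the PV and its data are kept, and the administrator has to clean them up.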
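
The reclaim policy can also be stated explicitly in the PV spec through `persistentVolumeReclaimPolicy`. Below is a sketch of the `pv-nfs` manifest with the field spelled out. Retain is already the default for this PV, so the field only makes the intent visible.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # keep the PV and its data after the PVC is deleted
  nfs:
    path: /data/nfs
    server: 192.168.10.129
```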

Write the YAML file for the PVC

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs
spec:
  accessModes:
  - ReadWriteMany      # must be offered by the PV
  resources:
    requests:
      storage: 1Gi     # the PV's capacity must be at least this much
```

Create the PVC and verify

```
[root@k8s-master1 ~]
persistentvolumeclaim/pvc-nfs created
[root@k8s-master1 ~]
NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
pvc-nfs   Bound    pv-nfs   1Gi        RWX                           38s
```

Note: STATUS must be Bound, which means the PVC has been successfully bound to a PV.
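
Binding is automatic: Kubernetes picks a PV whose capacity and access modes satisfy the claim. One catch applies if the cluster has a default StorageClass. A PVC without `storageClassName` is then assigned that class and will not bind to `pv-nfs`, which has no class. The sketch below shows a PVC that opts out of dynamic provisioning and asks for `pv-nfs` by name. Both `storageClassName` and `volumeName` are standard PVC spec fields.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs
spec:
  storageClassName: ""     # empty string: no StorageClass, bind only to class-less PVs
  volumeName: pv-nfs       # request this specific PV
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```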

Write the YAML for the Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nginx-nfs
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
      volumes:
      - name: www
        persistentVolumeClaim:
          claimName: pvc-nfs     # the PVC created above
```

Apply the YAML to create the Deployment

```
[root@k8s-master1 ~]
deployment.apps/deploy-nginx-nfs created
```

Verify the pods

```
[root@k8s-master1 ~]
deploy-nginx-nfs-6f9bc4546c-gbzcl   1/1   Running   0   1m46s
deploy-nginx-nfs-6f9bc4546c-hp4cv   1/1   Running   0   1m46s
[root@k8s-master1 ~]
/ #
index.html
/ #
volume-nfs
/ #
[root@k8s-master1 ~]
/ #
index.html
/ #
volume-nfs
/ #
```

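Dynamic provisioning

Creating a PV and then a PVC every time storage is needed quickly becomes tedious. Dynamic provisioning removes that chore.

With static provisioning, every PVC a user submits must be satisfied by a pre-created PV with enough capacity and a matching access mode. Dynamic provisioning has no such requirement.

The administrator does not need to create large numbers of PVs in advance.

Starting with version 1.4, Kubernetes introduced a new resource object, StorageClass, which defines storage resources as classes with distinct characteristics rather than as concrete PVs. A user's PVC requests the desired class directly. The claim is then either matched to a PV the administrator created beforehand, or a PV is created for it on demand, which removes the step of creating PVs first.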
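
From the user's side, requesting a class takes just one field on the PVC. The minimal sketch below assumes the `nfs-client` StorageClass that is set up later in this section. The claim name `test-claim` is hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim              # hypothetical name
spec:
  storageClassName: nfs-client  # the class to provision from (created later in this section)
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi              # no matching PV needs to exist; one is created on demand
```

Once the claim is Bound, `kubectl get pv` should list a new PV named `pvc-<uid>` that nobody created by hand.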

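Dynamic provisioning with NFS

PV support for a given storage system is provided by the corresponding plugin. The plugin types Kubernetes currently supports are listed in the official documentation.

Official documentation: https://kubernetes.io/docs/concepts/storage/storage-classes/

The built-in provisioners do not support dynamic provisioning for NFS, but a third-party plugin can provide it.

Third-party plugin: https://github.com/kubernetes-retired/external-storage (this repository has been retired; its NFS client provisioner continues as the kubernetes-sigs/nfs-subdir-external-provisioner project, whose provisioner name k8s-sigs.io/nfs-subdir-external-provisioner is used below)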

Download and create the StorageClass

```
[root@k8s-master1 ~]
[root@k8s-master1 ~]
[root@k8s-master1 ~]
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner   # must match PROVISIONER_NAME in the provisioner Deployment
parameters:
  archiveOnDelete: "false"   # "false": delete the PVC's directory when the PVC is deleted; "true": keep it archived
[root@k8s-master1 ~]
storageclass.storage.k8s.io/nfs-client created
[root@k8s-master1 ~]
NAME         PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-client   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           false                  10s
```
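
Optionally, the class can be marked as the cluster default so that PVCs without a `storageClassName` also use it. The sketch below uses the standard `storageclass.kubernetes.io/is-default-class` annotation. The `reclaimPolicy` shown is the StorageClass default (Delete) written out explicitly; set it to Retain if provisioned data should outlive its PVC.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"   # used for PVCs that name no class
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
reclaimPolicy: Delete          # the default; Retain keeps the PV after the PVC is deleted
parameters:
  archiveOnDelete: "false"
```

A default class also affects class-less claims such as `pvc-nfs` above. If such a claim is created after this change, it is provisioned dynamically instead of binding to `pv-nfs`.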

Download and create the RBAC objects

The provisioner creates PVs through the kube-apiserver, so it must be granted the corresponding permissions.

```
[root@k8s-master1 ~]
[root@k8s-master1 ~]
[root@k8s-master1 ~]
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
# namespaced Role: lets the provisioner use Endpoints objects for leader election
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
[root@k8s-master1 ~]
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
```

Create the provisioner Deployment

A dedicated Deployment runs the provisioner, which watches for PVCs and automatically creates matching PVs for them.

```
[root@k8s-master1 ~]
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner   # the ServiceAccount granted permissions by the RBAC objects above
      containers:
      - name: nfs-client-provisioner
        image: registry.cn-beijing.aliyuncs.com/pylixm/nfs-subdir-external-provisioner:v4.0.0
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: k8s-sigs.io/nfs-subdir-external-provisioner   # must match the StorageClass "provisioner" field
        - name: NFS_SERVER
          value: 192.168.10.129
        - name: NFS_PATH
          value: /data/nfs
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.10.129
          path: /data/nfs
[root@k8s-master1 ~]
deployment.apps/nfs-client-provisioner created
[root@k8s-master1 ~]
nfs-client-provisioner-5b5ddcd6c8-b6zbq   1/1   Running   0   34s
```

Test whether dynamic provisioning works

```
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None      # headless Service that governs the StatefulSet
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      imagePullSecrets:
      - name: huoban-harbor   # pull secret specific to the original environment; drop it if you have none
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:        # one PVC per replica: www-web-0, www-web-1
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nfs-client"   # provision from the nfs-client StorageClass
      resources:
        requests:
          storage: 1Gi
[root@k8s-master1 nfs]
NAME                                     READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-9c988bc46-pr55n   1/1     Running   0          95s
web-0                                    1/1     Running   0          95s
web-1                                    1/1     Running   0          61s
[root@nfsserver ~]
default-www-web-0-pvc-c4f7aeb0-6ee9-447f-a893-821774b8d11f
default-www-web-1-pvc-8b8a4d3d-f75f-43af-8387-b7073d07ec01
```

On the NFS server, the provisioner has created one directory per PVC under /data/nfs. Each directory is named after the namespace, the PVC name and the generated PV name.

Batch-download the files: https://raw.githubusercontent.com/kubernetes-incubator/external-storage/master/nfs-client/deploy/$file ; done